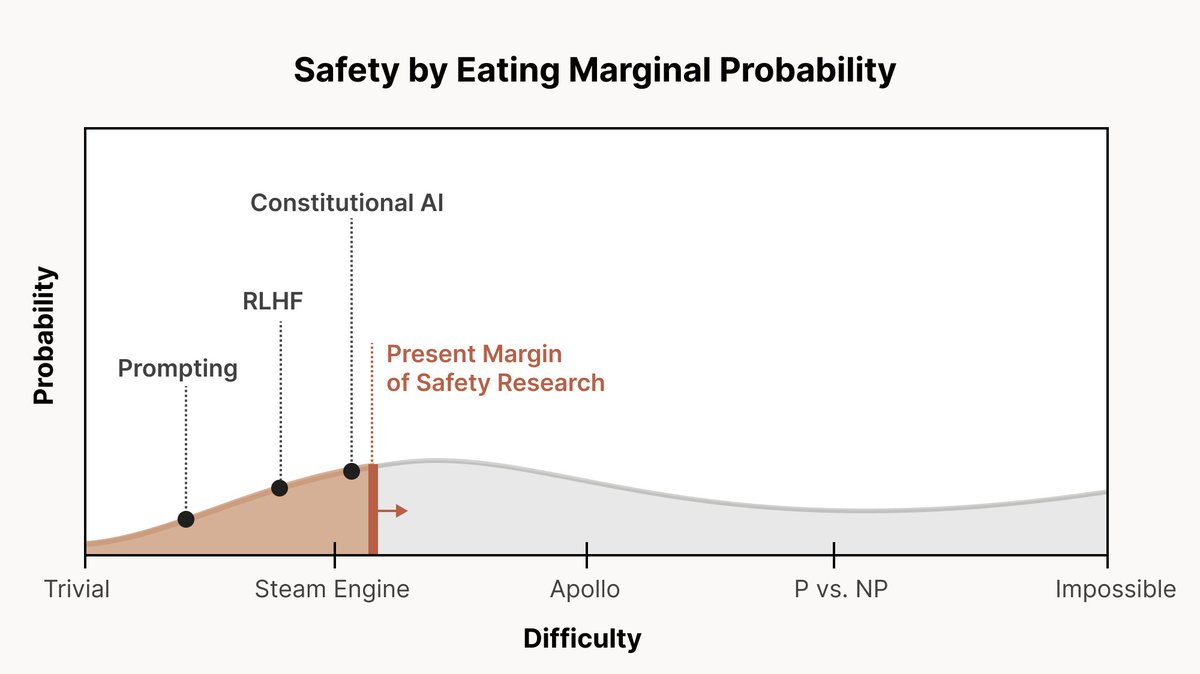

We currently lack the information needed to know with confidence whether we live in a world where alignment is easy or difficult. Chris Olah sketched out the spectrum of alignment difficulty in the graph above.

Evaluations, chain-of-thought interpretability, and mechanistic interpretability are important, and many researchers are already advancing them. We believe the left side of the graph is well covered by prosaic alignment, but given the current state of knowledge, significant probability mass should be placed on the difficult side. We believe the difficult end of the spectrum ('Apollo - P vs NP') is currently significantly neglected because work there is less legible. Our approach is not opposed to prosaic methods; it is fundamentally complementary to them.